We have three new publications out, as always, on a variety of topics!

- Estimating affective polarization on a social network, by Marilena Hohmann and Michele Coscia, published in PLOS ONE.

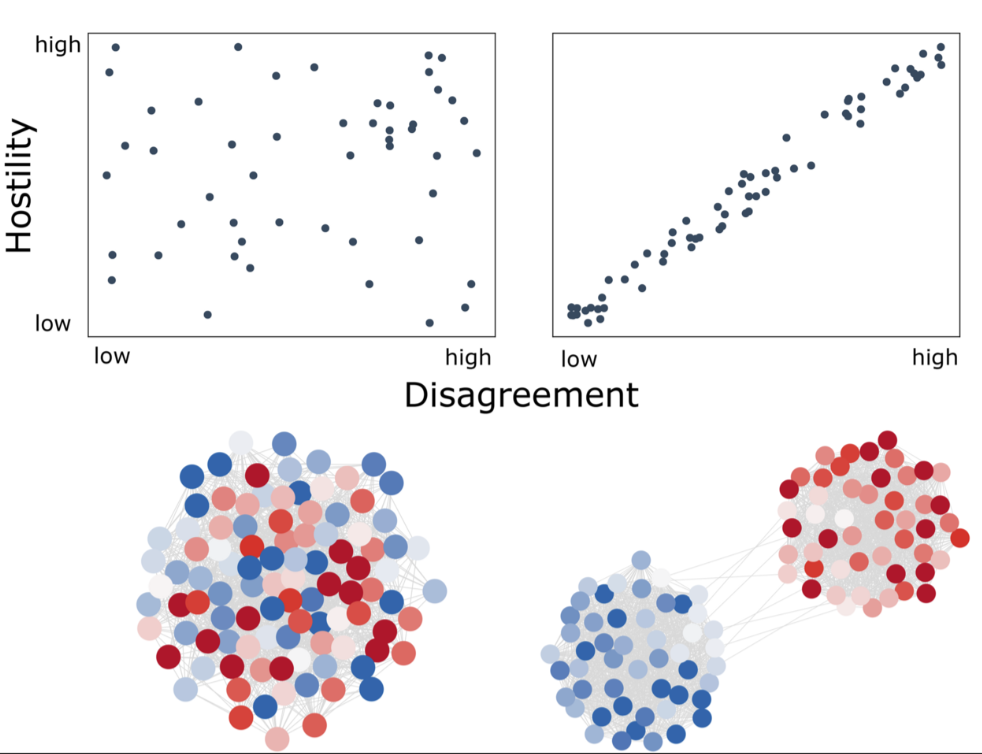

Concerns about polarization and hate speech on social media are widespread. Affective polarization, i.e., hostility among partisans, is crucial in this regard, as it links political disagreements to hostile language online. However, only a few methods are available to measure how affectively polarized an online debate is, and the existing approaches do not jointly investigate the two defining features of affective polarization: hostility and social distance. To address this methodological gap, we propose a network-based measure of affective polarization that combines both aspects while still allowing them to be studied independently. We show that our measure accurately captures the relation between the level of disagreement and the hostility expressed towards others (affective component), as well as whom individuals choose to interact with or avoid (social distance component). Applying our measure to a large-scale Twitter data set on COVID-19, we find that affective polarization was low in February 2020 and increased to high levels as more users joined the Twitter discussion in the following months.

See also Michele’s blog post: https://www.michelecoscia.com/?p=2466

- Leveraging VLLMs for Visual Clustering: Image-to-Text Mapping Shows Increased Semantic Capabilities and Interpretability, by Luigi Arminio, Matteo Magnani, Matías Piqueras, Luca Rossi, and Alexandra Segerberg, published in Social Science Computer Review.

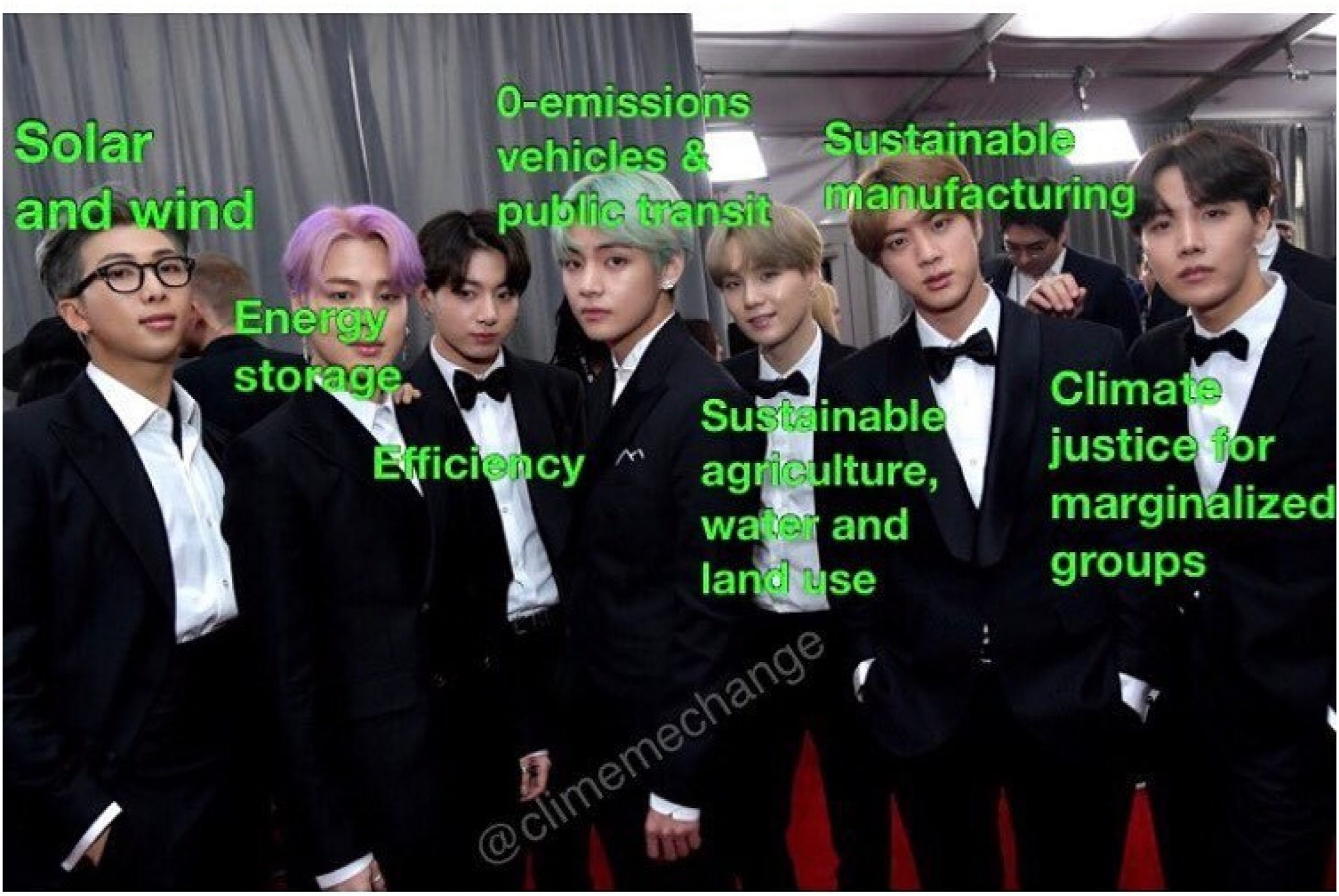

We test an approach that leverages the ability of Vision-and-Large-Language-Models (VLLMs) to generate image descriptions that incorporate connotative interpretations of the input images. In particular, we use a VLLM to generate connotative textual descriptions of a set of images related to the climate debate, and cluster the images based on these textual descriptions. In parallel, we cluster the same images using a more traditional approach based on CNNs. In doing so, we compare the connotative semantic validity of the clusters generated using VLLMs with that of the clusters produced using CNNs, and assess their interpretability. The results show that the VLLM-based approach greatly improves the quality score for connotative clustering. Moreover, because they use textual information as an intermediate step towards clustering, VLLM-based approaches offer a high level of interpretability of the results.

- Mapping Stakeholder Needs to Multi-Sided Fairness in Candidate Recommendation for Algorithmic Hiring, by Mesut Kaya and Toine Bogers, published in RecSys ’25: Proceedings of the Nineteenth ACM Conference on Recommender Systems.

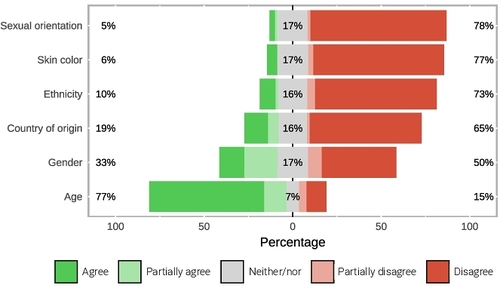

Past analyses of fairness in algorithmic hiring have been restricted to single-sided fairness, ignoring the perspectives of the other stakeholders. In this paper, we address this gap and present a multi-stakeholder approach to fairness in a candidate recommender system that recommends relevant candidate CVs to human recruiters in a human-in-the-loop algorithmic hiring scenario. We conducted semi-structured interviews with 40 different stakeholders (job seekers, companies, recruiters, and other job portal employees). We used these interviews to explore their lived experiences of unfairness in hiring and to co-design definitions of fairness, as well as metrics that might capture these experiences. Finally, we attempt to reconcile and map these different (and sometimes conflicting) perspectives and definitions to existing (categories of) fairness metrics that are relevant for our candidate recommendation scenario.